Analysing the status of an AI model with regard to the GDPR

05 January 2026

AI models may be subject to the GDPR if they store personal data from their training process. The CNIL proposes a method for providers to assess whether or not their models are subject to the GDPR.

Some AI models can be considered anonymous, in which case the GDPR does not apply to them.

In some cases, AI models retain part of the data used to train them. It then becomes possible to extract personal data from them if the training set contained any. When this extraction can be carried out using means reasonably likely to be used, these models fall within the scope of the GDPR. The CNIL helps model providers determine whether this is the case.

Introduction and scope of the sheet

Purpose of the sheet

This how-to sheet, intended for providers, details the methodology for assessing and documenting the likelihood of re-identifying individuals from an AI model trained on personal data, or from an AI system based on a model that cannot be considered anonymous.

Once this analysis has been carried out, unless the likelihood is insignificant, the GDPR will apply to the processing operations relating to the model or system. In this how-to sheet, the conclusion of the analysis with regard to the applicability of the GDPR is referred to as the "status" of the model or of the use of the system.

Where appropriate, this analysis will make it possible to draw the consequences of the application of the GDPR, in particular through a precise assessment of the likelihood of re-identification associated with each type of personal data in the training dataset. A future how-to sheet will specify the consequences of applying the GDPR to a processing operation involving an AI model.

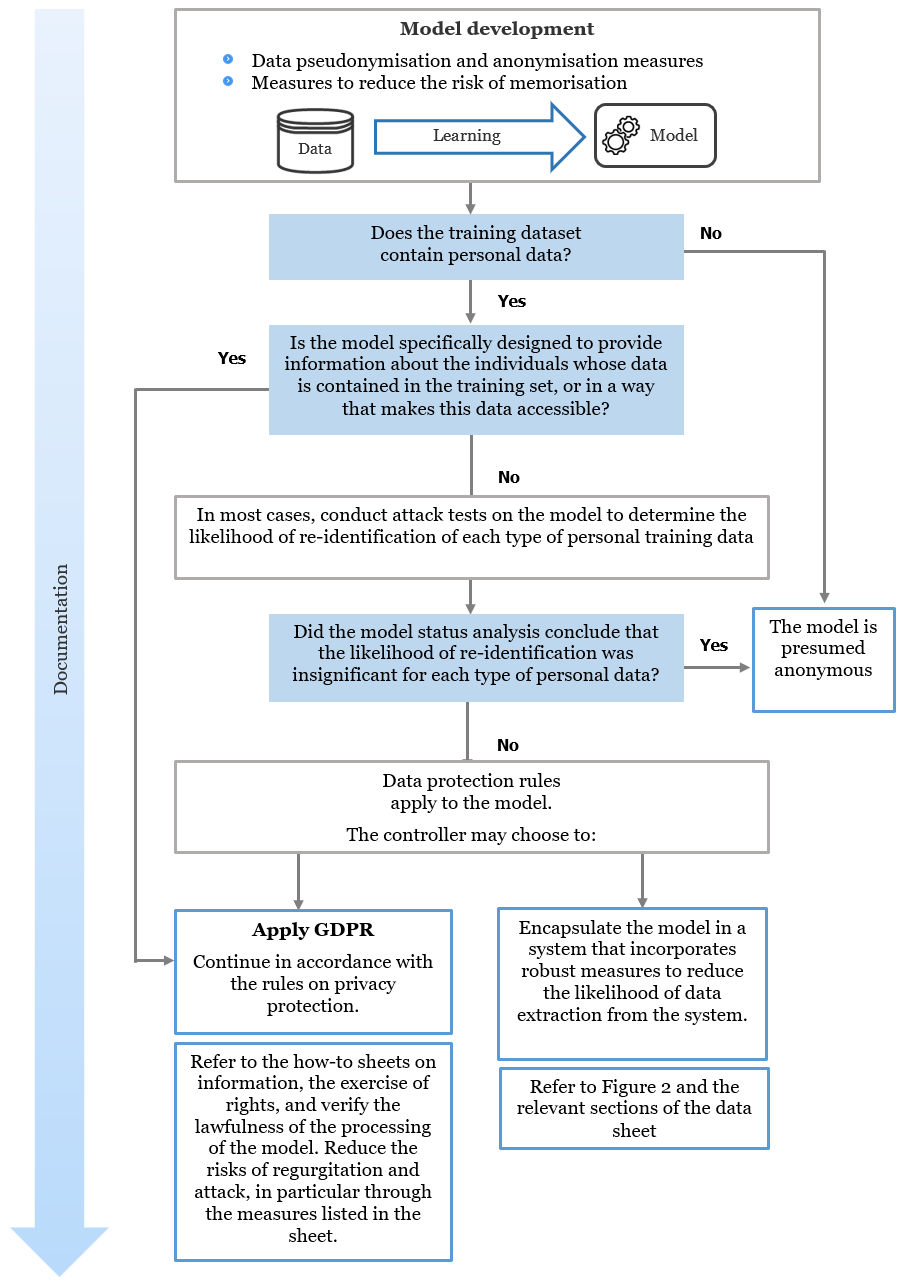

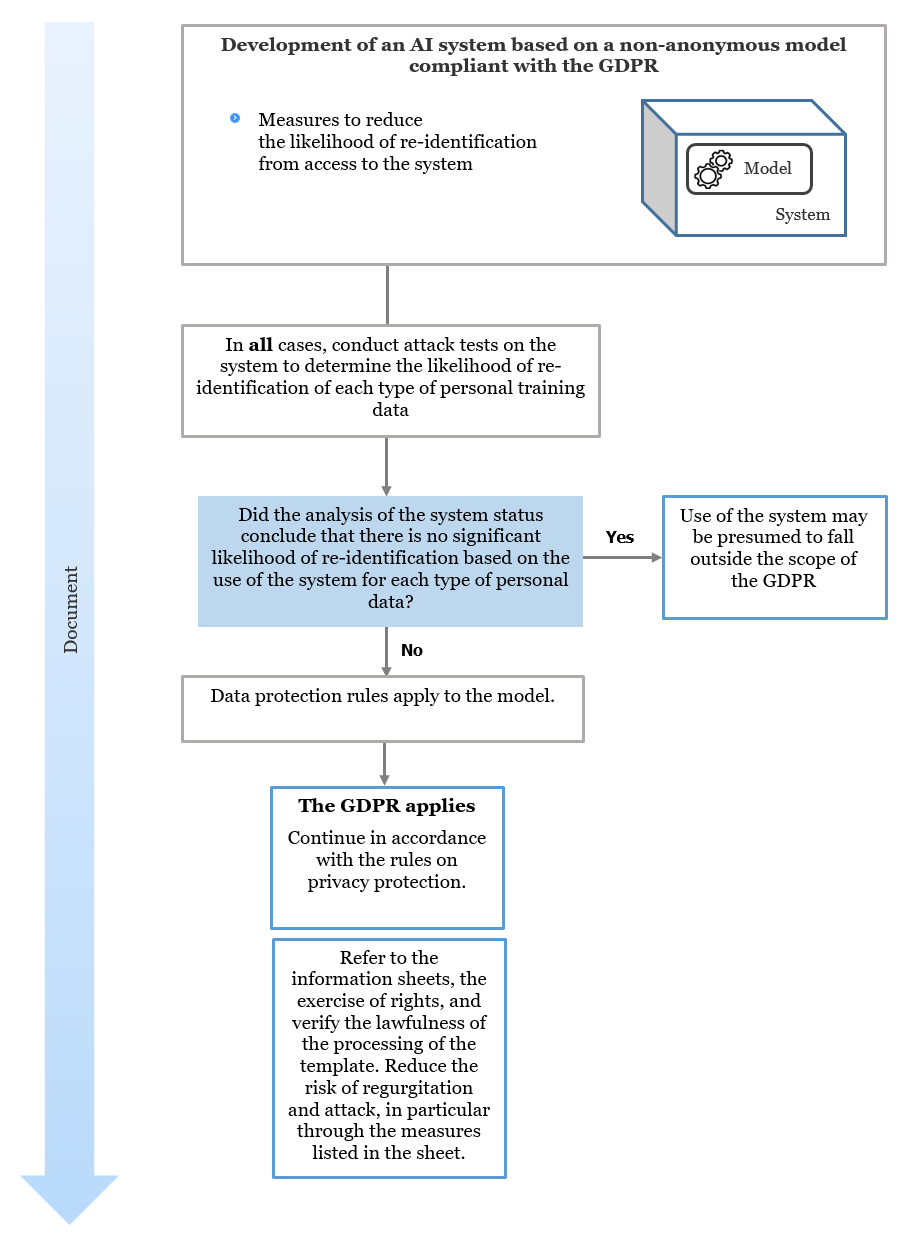

The two figures below summarise the conduct of the analysis in the following two situations:

- Figure 1: Managing the analysis as a provider or representative of the status of an AI model;

- Figure 2: Managing the analysis as a deployer of the status of an AI system based on a non-anonymous model.

Figure 1 - Managing the status analysis of an AI model

Figure 2 - Managing a status analysis of an AI system based on a non-anonymous model

AI models and systems concerned

An AI model is a statistical representation of the characteristics of the dataset used to train it. Numerous academic studies have shown that, in certain cases, this representation is precise enough to lead to the disclosure of some of the training data. This reconstruction can take the form of data regurgitation during use, in the case of generative AI systems, or can follow an attack such as those described in the LINC article "Small taxonomy of attacks on AI systems". As specified in the opinion of the European Data Protection Board (EDPB) (see box), where personal data can, intentionally or unintentionally, be regurgitated by or extracted from an AI model or system using means reasonably likely to be used, the GDPR applies to the processing operations concerning that model or system.

Status analysis therefore concerns any AI model trained on personal data and any AI system that incorporates a non-anonymous AI model. If the status analysis of an AI model concludes that it is outside the scope of the GDPR, then an AI system based exclusively on that model is also outside its scope.

Need to analyse the status of the AI model or system

Any data controller must adequately document the compliance of its personal data processing (in line with the principle of accountability), for example in a data protection impact assessment.

Therefore, when an AI model is trained on a dataset containing personal data, an analysis must systematically be carried out to determine whether the GDPR applies to the model and, where appropriate, to draw the consequences.

The model provider (to whom this how-to sheet is addressed) will most often be the controller for this development processing, since it determines the objective and characteristics of the model (its purpose, functionalities, deployment context, etc.) as well as the personal data used for training. When the provider concludes that the AI model is not subject to the GDPR, the documentation of the analysis of the model's status must be available for presentation to data protection authorities such as the CNIL. It must demonstrate that the likelihood of re-identifying, from the model or system, the natural persons whose data is contained in the training dataset is insignificant.

The documentation must therefore detail the measures taken when training the model in order to limit the likelihood of re-identification from access to it, and in most cases, include the results of re-identification attack tests.

Where an AI model cannot be considered anonymous, the likelihood of re-identifying individuals may be mitigated by integrating the model into an AI system that implements robust measures to prevent personal data from being extracted from it. In some cases, these measures may take the use of the system outside the scope of the GDPR. The provider of such a system will therefore have to conduct and document its analysis, which must, in all cases, include the results of re-identification attack tests on the system.

When an AI system provider integrating a non-anonymous AI model claims that the use of its system is no longer subject to the GDPR, the CNIL recommends sharing or publishing sufficient documentation to allow users to verify this status, and thus to demonstrate that they are not processing personal data contained in the model. For providers of AI models assessed as anonymous, sharing this analysis is good practice.

The obligations applicable when a provider supplies a non-anonymous AI model (including documentation obligations) will be detailed in a later sheet. They will also apply when the model is shared with a third party who intends to encapsulate it in a system in order to take it outside the scope of the GDPR.

Focus on EDPB Opinion 28/2024 on some data protection aspects of the processing of personal data in the context of AI models

The EDPB has adopted an opinion on the use of personal data for the development and deployment of AI models. The opinion examines 1) when and how AI models can be considered as anonymous, 2) whether and how legitimate interest can be used as a legal basis and 3) the consequences under the GDPR when an AI model is developed using personal data that has been unlawfully processed.

With regard to the status of AI models, the opinion reiterates that the question of whether a model is anonymous must be assessed on a case-by-case basis by the data protection authorities. In particular, it states:

- that a model specifically designed to produce or infer information about natural persons whose data is contained in its training set contains personal data, and is therefore subject to the GDPR;

- that, for a model trained on data including personal data, but not specifically designed to produce or infer information about those individuals, to be anonymous, it must be highly unlikely (1) that someone could directly or indirectly identify the individuals whose data was used to create the model from the model's parameters ("white-box attacks"), and (2) that such personal data could be extracted from the model through queries.

The opinion provides a non-prescriptive and non-exhaustive list of methods for demonstrating anonymity, on which this how-to sheet is based.

Consequences when models or AI systems are not considered anonymous

The provider of an AI model or system subject to the GDPR will have to comply with its obligations under the GDPR and, in France, under the French Data Protection Act.

The same applies to any other player in the chain (other AI system providers, distributors, deployers, etc.), who will have to comply with GDPR obligations when handling or using these models or systems.

This involves ensuring the lawfulness of the processing, informing individuals, enabling them to exercise their rights, ensuring the security of the model, etc.

In practice, compliance of the model with the GDPR relies heavily on the provider; a forthcoming sheet will help players determine their obligations. How these requirements are met, and the appropriate level of safeguards, will depend on the nature of the personal data contained in the model and likely to be extracted from it.

A word of caution: it is possible that the GDPR may apply to the use of an AI model or system that is nevertheless considered anonymous by its provider. This situation is discussed at the end of this how-to sheet.

Conducting the analysis

Documenting the status analysis of an AI model or a system based on a non-anonymous model

This section aims to guide the data controller in documenting the status analysis of an AI model or the use of a non-anonymous model-based system. The following table provides a non-exhaustive list of information to be included in the documentation of a model or system.

| Type of information | Document | Condition for inclusion: AI model trained on personal data | Condition for inclusion: use of an AI system based on a non-anonymous AI model |
|---|---|---|---|
| General governance | Any information relating to a DPIA, including any assessment or decision that led to the conclusion that a DPIA was not necessary | As soon as the information exists | As soon as the information exists |
| General governance | Any advice or opinion given by the Data Protection Officer (DPO), where a DPO has been or should have been appointed | As soon as the information exists | As soon as the information exists |
| General governance | Documentation of residual risks | Including, where applicable, the documentation intended to be sent to the controller deploying the system and/or to the data subjects, in particular documentation of the measures taken to reduce the likelihood of re-identification and of any residual risks | Including, where applicable, the documentation intended to be provided by the provider to the controller deploying the system |
| Technical governance | Any information on the technical and organisational measures taken at the design stage to reduce the likelihood of re-identification, including scenarios covering a broad range of attackers (from the weakest to the strongest) and the risk assessment on which these measures are based | Including the specific measures taken for each source or type of source of training data, including, where relevant, the URLs of the sources concerned by the measures taken by the controller (or already taken by the distributor, for data made available by a third party) | Including the specific measures taken for each source or type of source of data stored by the model, including, where relevant, the URLs of the sources concerned by the measures taken by the controller (or already taken by the distributor, for a model made available by a third party) |
| Technical governance | The technical and operational measures taken at any stage of the lifecycle that have either (i) contributed to or (ii) verified the absence of personal data in the model or in the use of the system | As soon as the information exists | As soon as the information exists |
| Resistance to attacks | Preliminary documentation demonstrating theoretical resistance to re-identification techniques | As soon as the information exists; this may include a theoretical analysis in light of the state of the art (for example, taking into account the ratio between the quantity of training data and the size of the model, or aiming to demonstrate the model's intrinsic capacity to memorise data) | As soon as the information exists; this may include a theoretical analysis in light of the state of the art (for example, taking into account the existence of methods for circumventing measures such as output filters) |
| Resistance to attacks | The results of ex post re-identification attack tests | In most cases, as determined by a combination of indicators (see "Characterising the necessity to test for re-identification attacks on an AI model") | In all cases |

Characterising the necessity to test for re-identification attacks on an AI model

A set of indicators can be used to assess whether a re-identification attack test is needed to determine the status of the model. It is up to the data controller to assess the risk revealed by one or more of these indicators. Sometimes a single indicator is enough to show that the risk of memorisation is high; in other cases the same indicator may be less decisive, and the other criteria then need to be examined. This list of indicators is for information purposes only and may change in light of the state of the art and of scientific understanding of memorisation in models.

The absence of these indicators does not provide certainty that no memorisation has occurred, nor does it ever rule out the need for a re-identification attack test; rather, it means that a more detailed analysis should be carried out.

For example, in the case of generative AI, the regurgitation of certain training data (not necessarily personal) can sometimes be demonstrated by quick tests, such as very specific prompts. A successful test demonstrates memorisation, but a test in which no data is regurgitated does not rule it out. The same applies to the criteria listed below: the absence of one indicator is not, on its own, sufficient to conclude that the likelihood of re-identification is sufficiently low, and the other indicators must also be considered.
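A quick regurgitation test of this kind can be sketched as follows, purely as an illustration. It prompts a generative model with the beginning of known training records and checks whether the output reproduces what follows verbatim. The `generate` callable, the `prefix_len` and `match_len` parameters and the fictional records are hypothetical; a real test would call the provider's own inference interface on a sample of actual training records. A non-zero rate shows memorisation; a zero rate does not rule it out.

```python
from typing import Callable, Sequence


def regurgitation_rate(
    generate: Callable[[str], str],
    records: Sequence[str],
    prefix_len: int = 20,
    match_len: int = 20,
) -> float:
    """Share of tested training records whose continuation the model reproduces verbatim.

    `generate` is a hypothetical stand-in for the model's inference call: it takes
    a prompt and returns the generated continuation as text.
    """
    tested = 0
    regurgitated = 0
    for record in records:
        # Skip records too short to split into a prompt and a checkable continuation.
        if len(record) < prefix_len + match_len:
            continue
        prefix = record[:prefix_len]
        expected = record[prefix_len:prefix_len + match_len]
        tested += 1
        # An exact match on the first `match_len` characters counts as regurgitation.
        if generate(prefix)[:match_len] == expected:
            regurgitated += 1
    return regurgitated / tested if tested else 0.0


if __name__ == "__main__":
    # Fictional records, for illustration only.
    training_sample = [
        "Patient: Jeanne Martin, born 12/03/1961, lives at 4 rue des Lilas, Lyon",
        "Patient: Paul Durand, born 30/11/1975, lives at 18 avenue Foch, Nantes",
    ]

    # Toy "model" that has memorised its training records: it returns the rest of
    # any record starting with the prompt, and nothing otherwise.
    def memorising_model(prompt: str) -> str:
        for record in training_sample:
            if record.startswith(prompt):
                return record[len(prompt):]
        return ""

    print(regurgitation_rate(memorising_model, training_sample))  # prints 1.0
    print(regurgitation_rate(lambda prompt: "", training_sample))  # prints 0.0
```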

| Category | Indicator of the presence of personal data in the model | Possible mitigation |
|---|---|---|
| Training dataset | The identifying and precise nature of the data in the training set, such as the presence of surnames, first names, facial photographs, voice extracts, addresses or exact dates of birth. | Consider deleting identifying information or, where possible, using data generalisation, aggregation, random perturbation or pseudonymisation techniques. |
| Training dataset | Data variety, or the presence of rare or outlier data corresponding to unique individuals or to individuals whose statistical characteristics are in the minority in the training dataset. Because they fall outside the theoretical statistical distribution of the training data, such data are more likely to cause overfitting and tend to be memorised first during training. | Carry out a statistical pre-analysis of the training dataset to identify and then remove rare or outlier data, or perform aggregations to smooth the statistical distribution of the training data. |
| Training dataset | The duplication of training data, i.e. the presence of exact or approximate repetitions, which is often a factor leading to overfitting and memorisation. Because they are represented several times in the theoretical statistical distribution of the training data, duplicated personal data tend to be memorised first. | Clean the training dataset to remove both exact and approximate duplicates (deduplication). |
| Model architecture and training | A large number of model parameters relative to the volume of training data. More parameters tend to allow finer modelling of the training data, and thus the learning of the characteristics of the data themselves rather than only their statistical distribution. The threshold beyond which the number of parameters indicates a high risk of memorisation should be determined according to the context, in particular the functionality of the model and the volume of training data. | Use models with fewer parameters, in particular in light of the state of the art. |
| Model architecture and training | Potential overfitting, measured with a relevant metric, i.e. the model has learned a statistical distribution that is too close to the training data. | |
| Model architecture and training | Absence of confidentiality guarantees in the training algorithm, such as differential privacy. | Implement measures that limit the influence of individual data points on training, such as algorithms guaranteeing acceptable levels of differential privacy (for example, by adding noise to the gradient when optimising the model's cost function). |
| Model architecture and training | The use of personal data for fine-tuning or transfer learning. Like training a model from scratch (ex nihilo), this phase can lead to memorisation of training data. | Consider anonymising the fine-tuning data, or using algorithms that provide formal confidentiality guarantees for fine-tuning (expressed, for example, in terms of differential privacy). |
| Functions and uses of the model | Functionalities aimed at reproducing data similar to the training data, such as content generation or textual data synthesis. In the particular case of generative AI: the generation, from a prompt, of data (text, image, audio, not necessarily personal) that presumably relates to the training data. | |
| Functions and uses of the model | Successful attacks on the same type of model developed in a comparable context, as documented in scientific publications or press articles. | |
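To illustrate the deduplication mitigation mentioned in the table, the following minimal sketch drops exact duplicates (after normalising case and whitespace) and approximate duplicates whose character n-gram Jaccard similarity with an already-kept record reaches a threshold. The function names, the n-gram size and the 0.8 threshold are assumptions chosen for the example, not values recommended by the CNIL.

```python
import re
from typing import List, Set


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so that trivial variants compare as equal."""
    return re.sub(r"\s+", " ", text.strip().lower())


def shingles(text: str, n: int = 5) -> Set[str]:
    """Character n-grams ("shingles") of the normalised text."""
    text = normalise(text)
    if len(text) <= n:
        return {text}
    return {text[i:i + n] for i in range(len(text) - n + 1)}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by the size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def deduplicate(records: List[str], threshold: float = 0.8, n: int = 5) -> List[str]:
    """Keep the first occurrence of each record, dropping exact duplicates (after
    normalisation) and near-duplicates of an already-kept record."""
    kept: List[str] = []
    kept_shingles: List[Set[str]] = []
    seen_exact: Set[str] = set()
    for record in records:
        key = normalise(record)
        if key in seen_exact:
            continue  # exact duplicate
        sh = shingles(record, n)
        if any(jaccard(sh, other) >= threshold for other in kept_shingles):
            continue  # approximate duplicate
        seen_exact.add(key)
        kept.append(record)
        kept_shingles.append(sh)
    return kept
```

This pairwise comparison is quadratic in the number of records; for large training corpora, approximate techniques such as MinHash with locality-sensitive hashing are commonly used instead.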

The examples below are intended to illustrate the practical implementation of this set of indicators:

- A large language model is trained by an organisation on very large text datasets freely available on the Internet. The organisation reused these datasets as they were, without modifying their content. The presence of personal data in the datasets can be suspected given the wide variety of their sources, the absence of anonymisation measures and the functionality of the model. Taken together, these indicators lead to the conclusion that a re-identification attack test is necessary.

- A model used in environmental health to predict the risk that a population exposed to certain atmospheric pollutants will develop lung cancer is trained on patients' geographical data, medical history and lifestyle habits. Because certain areas of the region studied are under-represented in the database, the data of some patients are rare data, or outliers, in the geographical distribution of the cohort as a whole. Given the risk that an attacker could use the prediction scores obtained by querying the model trained on these people's data to infer whether they have lung cancer, the presence of rare data is deemed sufficient, on its own, to make a re-identification attack test necessary.

- An energy consumption database collected from several households is used to train a neural network to recognise the domestic appliances in use. If an appliance is only found in a small number of these households (rare data), the trained network could be significantly more confident in its predictions when applied to data from households in the training set that own the appliance. This difference can be exploited to determine in which households the data was collected (membership inference attack). Two criteria are met here: the presence of rare data and the use of a model with a large number of parameters. Re-identification attack testing is deemed necessary.
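The rare-data indicator in these examples can be checked, as a first approach, by counting how many records share each value of an attribute (a geographical area, an appliance) and flagging values held by fewer than `k` records. This is a simplified sketch: the function names and the threshold `k` are hypothetical, and the appropriate threshold has to be justified in context.

```python
from collections import Counter
from typing import Dict, Hashable, List, Sequence


def rare_values(values: Sequence[Hashable], k: int = 5) -> Dict[Hashable, int]:
    """Return the values shared by fewer than `k` records, with their counts.

    `k` is a hypothetical threshold: the appropriate value depends on the context
    and has to be justified by the controller.
    """
    counts = Counter(values)
    return {value: n for value, n in counts.items() if n < k}


def flag_rare_records(values: Sequence[Hashable], k: int = 5) -> List[int]:
    """Indices of records holding a rare value: candidates for removal or aggregation."""
    rare = rare_values(values, k)
    return [i for i, value in enumerate(values) if value in rare]


if __name__ == "__main__":
    # Illustrative data: one appliance type per household record.
    appliances = ["fridge"] * 40 + ["heat_pump"] * 2
    print(rare_values(appliances))        # prints {'heat_pump': 2}
    print(flag_rare_records(appliances))  # prints [40, 41]
```

Passing tuples of several attributes (for example area and age band) instead of single values checks rare combinations, which can single out individuals even when each attribute taken alone is common.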

Carrying out re-identification attack tests on an AI model

In most cases, particularly in light of the indicators above, an AI model trained on personal data will need to be subjected to re-identification attacks that may constitute means reasonably likely to be used to re-identify individuals. The purpose of these attack tests is to estimate the likelihood of re-identifying individuals from the model. To conclude that the model is anonymous, the likelihood of extraction by means reasonably likely to be used must be insignificant.

Determining the means reasonably likely to be used to extract data from an AI model

Characterising the means reasonably likely to be used by the controller or any other person is an essential step in analysing the status of the model. This characterisation must be based on objective criteria, which may include:

- additional information which would allow re-identification, and which would be accessible to the person;

- the cost and time required for such a person to obtain this additional information;

- the state of the art of technology available and in development, in particular concerning techniques for extracting data from AI models.

The assessment of the means reasonably likely to be used must take into account the possibility of access to the model not only by the data controller, but also by third parties who should not have had access to it. Although measures to reduce the likelihood of personal data being extracted can be taken during both the development and deployment of an AI model, the assessment of the anonymity of the model must also take into account the possibility of direct access to it.

Simply restricting access to the data (and/or the model) does not systematically guarantee anonymity. However, limited access can reduce the likelihood of re-identification (without automatically making it insignificant). The means reasonably likely to be used to extract personal data may depend on the context in which a model is developed and deployed, so the levels of testing and resistance to attack required may vary with that context. The conclusion of the analysis may therefore differ between a model that is publicly accessible to an unlimited number of users and a model that is internal to a company, with access limited to a few employees, as detailed later in this sheet in the section on AI systems based on non-anonymous models.

The criteria set out above take account of the technical specificities of AI models and the way they are designed. They cannot therefore be automatically transposed to the analysis of anonymisation in other fields. Furthermore, legal and contractual guarantees aimed at limiting the access or use of a model do not replace the anonymisation techniques that could be implemented on the training dataset or during the learning phase, but complement them.

AI model testing and resistance to attack

The following list provides criteria that can be used to assess the likelihood that personal data can be extracted, using attack techniques, from an AI model trained on such data.

The relevance of the scope, frequency, quantity and quality of the data extraction tests that the data controller has carried out on the model must be assessed in the light of the state of the art, as well as the means reasonably likely to be used in relation to the model. These tests may include in particular:

- Training data regurgitation tests, in the case of generative AI models;

- Membership inference attacks;

- Exfiltration attacks;

- Model inversion attacks;

- Reconstruction attacks;

- A measure of the intrinsic memory capacity of the model architecture.
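As an illustration of one of these tests, one of the simplest membership inference attacks described in the academic literature, often called a loss-threshold attack, guesses that a record was in the training set when the model's loss on it is low. The sketch below measures the resulting advantage (true positive rate minus false positive rate) from per-record losses, assumed to have been computed beforehand on known training records and on held-out records; computing those losses depends on the model and is not shown. The function names are hypothetical.

```python
from typing import Sequence


def advantage_at(
    member_losses: Sequence[float],
    non_member_losses: Sequence[float],
    threshold: float,
) -> float:
    """TPR - FPR of the rule 'predict member if loss <= threshold'."""
    tpr = sum(loss <= threshold for loss in member_losses) / len(member_losses)
    fpr = sum(loss <= threshold for loss in non_member_losses) / len(non_member_losses)
    return tpr - fpr


def best_advantage(
    member_losses: Sequence[float],
    non_member_losses: Sequence[float],
) -> float:
    """Largest advantage over all thresholds taken from the observed losses.

    0 means the attack does no better than chance on this sample; values close
    to 1 mean membership can be inferred almost perfectly.
    """
    candidates = sorted(set(member_losses) | set(non_member_losses))
    return max(advantage_at(member_losses, non_member_losses, t) for t in candidates)


if __name__ == "__main__":
    # Illustrative losses only: training records are fitted far better than held-out ones.
    members = [0.05, 0.10, 0.12, 0.40]
    non_members = [0.35, 0.80, 0.95, 1.20]
    print(best_advantage(members, non_members))
```

Choosing the threshold on the same sample that is used to report the result gives an optimistic estimate; in practice the threshold would be calibrated on separate data, and stronger attacks from the literature (for example those using reference or "shadow" models) may succeed where this one fails.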

It should be noted that resistance to a technique implementing one of these types of attack does not prejudge resistance to another technique.

Recommendations concerning the management of tests and attacks

Certain types of attack presented above require demanding technical knowledge and resources. It is therefore recommended to carry out attacks on the AI model in order of increasing difficulty of implementation. When an attack allows personal data to be extracted from the AI model, the type of personal data affected must be determined. The data controller can then choose to:

- Stop the analysis at this stage, considering that all the data types present in the training set can be extracted with the likelihood associated with the easiest attack that succeeded on any one type;

- Continue the analysis by carrying out all the tests and attacks that constitute means reasonably likely to be used, in order to establish the likelihood of extraction associated with each type of training data. More in-depth re-identification tests may, for example, make it possible to distinguish the likelihood of extraction of pre-training data from that of fine-tuning data, which may present different risks for the individuals concerned.

Example: The data office of a hospital wants to develop a large language model to help write medical consultation reports. To do this, it pre-trains a large language model on publicly accessible data (to give it general text skills), then carries out a fine-tuning phase on a dataset of previously pseudonymised medical reports (to specialise it in this type of text). After the fine-tuning phase on sensitive personal data, the indicators lead to the conclusion that re-identification attack tests are necessary. In the testing phase, the data controller begins by sending simple queries to the model in an attempt to extract training data. The result is that simple queries can be used to extract personal data from the pre-training dataset. At this stage, the data controller may:

- Stop the analysis and, when drawing the consequences of the application of the GDPR, consider that all types of training data, including the sensitive data from the fine-tuning phase that has not been extracted at this stage of the analysis, can be extracted from the model with simple attacks, and therefore with a high likelihood;

- Continue the analysis using attacks that are more difficult to implement, in order to accurately assess the likelihood of extraction of the sensitive data and refine the analysis of the consequences of applying the GDPR. The safeguards to be implemented will not be the same depending on whether the personal data contained in the model is publicly accessible data or confidential and sensitive data from the fine-tuning phase.
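The two options can be illustrated by a minimal sketch that runs attacks in order of increasing difficulty and records, for each type of training data, the easiest attack considered to extract it. The `Attack` structure, the difficulty scale and the simulated attack outcomes, loosely modelled on the hospital example, are hypothetical; in particular, the sketch assumes for illustration that a harder attack extracts the fine-tuning data, which the example does not state.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, List, Optional


@dataclass
class Attack:
    """A re-identification attack (hypothetical structure for this sketch)."""

    name: str
    difficulty: int  # assumed ordinal scale: 1 = easiest to implement
    run: Callable[[], FrozenSet[str]]  # returns the data types the attack extracted


def run_campaign(
    attacks: List[Attack],
    data_types: FrozenSet[str],
    stop_at_first_success: bool,
) -> Dict[str, Optional[str]]:
    """Map each training data type to the easiest attack considered to extract it.

    None means that no attack in the campaign extracted that data type.
    """
    result: Dict[str, Optional[str]] = {t: None for t in data_types}
    # Run attacks from the easiest to the most demanding.
    for attack in sorted(attacks, key=lambda a: a.difficulty):
        extracted = attack.run() & data_types
        if not extracted:
            continue
        if stop_at_first_success:
            # Option 1: stop and treat every data type as extractable with the
            # likelihood associated with this easiest successful attack.
            return {t: attack.name for t in data_types}
        # Option 2: continue, keeping for each data type its easiest successful attack.
        for t in extracted:
            if result[t] is None:
                result[t] = attack.name
    return result


if __name__ == "__main__":
    data_types = frozenset({"pre-training data", "fine-tuning data"})
    attacks = [
        # Simulated outcomes, for illustration only.
        Attack("advanced attack", 3, lambda: frozenset({"fine-tuning data"})),
        Attack("simple queries", 1, lambda: frozenset({"pre-training data"})),
    ]
    print(run_campaign(attacks, data_types, stop_at_first_success=True))
    print(run_campaign(attacks, data_types, stop_at_first_success=False))
```

With the first option, both data types are attributed to "simple queries"; with the second, the fine-tuning data is attributed to the harder attack, which is what allows differentiated safeguards.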

Once this analysis has been carried out, the data controller will be able to determine whether the GDPR applies to its model. If it does, various consequences may follow; a forthcoming how-to sheet will specify the consequences of applying the GDPR to processing operations involving an AI model.

Reducing the likelihood of re-identification from an AI system based on a non-anonymous model

When an AI system is based on an AI model that falls within the scope of the GDPR, it is possible to put in place measures which, if sufficiently effective and robust, could make the likelihood of re-identifying individuals insignificant. The use of the system could then fall outside the scope of the GDPR, subject to the results of the analysis to be carried out. To do this, the provider of the AI system it intends to anonymise will have to assess the likelihood of re-identification, in particular on the basis of attack tests on the system. This section is intended to guide this analysis.

Please note: The following developments are not transposable to other forms of anonymisation analysis.

The following list details measures that can reduce the likelihood of re-identification from an AI system incorporating a model for which this likelihood is not insignificant. This list is indicative, and does not prejudge the existence of other sufficiently robust measures to reduce this likelihood.

| Measures reducing the likelihood of re-identification at system level |
|---|
| Impossibility of directly accessing or recovering the model through interactions with the system: it must be impossible for any user of the system, even a malicious one, to access the model directly or to recover it using any means reasonably likely to be used. |
| Restrictions on access to the system, such as: |
| Modifications made to the model's outputs to limit the risk of re-identification of individuals by users of the system. For example, it is possible to: |
| Security measures designed to prevent or detect attempted attacks, such as encrypting the model, logging accesses to, modifications of and uses of the model, or using strong authentication methods. |
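As a purely illustrative example of an output modification, the sketch below redacts e-mail addresses and French-format phone numbers from a system's output before it is returned. The patterns and the `filter_output` name are assumptions that cover only two data formats. Such filters can be circumvented (for example by asking the model to spell out or encode the data), so they reduce rather than eliminate the likelihood of extraction and must themselves be covered by the attack tests.

```python
import re

# Illustrative patterns only (assumptions, not an exhaustive list): e-mail addresses
# and French-format phone numbers such as "06 12 34 56 78" or "+33 6 12 34 56 78".
PATTERNS = (
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("PHONE", re.compile(r"(?:\+33\s?|0)[1-9](?:[\s.-]?\d{2}){4}")),
)


def filter_output(text: str) -> str:
    """Replace every match of each pattern with a placeholder before returning the output."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


if __name__ == "__main__":
    print(filter_output("Write to jeanne.martin@example.fr or call 06 12 34 56 78."))
    # prints: Write to [EMAIL REDACTED] or call [PHONE REDACTED].
```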

Carrying out re-identification attack tests on an AI system based on a non-anonymous model

The aim of these tests is to estimate the likelihood of personal data being extracted from use of the system, and from any means reasonably likely to be used, including attack methods whose result is probabilistic. In order to consider that the use of the AI system does not fall within the scope of the GDPR, this likelihood of extraction must be insignificant.

Determining the means reasonably likely to be used to extract data from an AI system

As with the analysis of the status of models, characterising the means reasonably likely to be used is an essential step in analysing the status of the use of the system. This characterisation must be based on objective criteria, which may include:

- the context in which the AI system is deployed, which may include access limitations as well as legal protections;

- the extent of access to the internal workings of the model through use of the system;

- additional information that would enable re-identification and that would be accessible to the person, including beyond publicly available sources;

- the cost and time required for such a person to obtain this additional information;

- the state-of-the-art technology available and in development, in particular concerning techniques for extracting data from AI systems.

The assessment of the means reasonably likely to be used must take into account the possibility of access to the system by any person, including persons who should not have had access. Simply restricting access to the model and/or the system does not systematically guarantee anonymity. Restricted access may reduce the likelihood of re-identification (without, however, automatically rendering it insignificant). Furthermore, legal and contractual guarantees aimed at limiting access to or use of a model do not replace anonymisation techniques, but can complement them. Since the means reasonably likely to be used depend on this context, the levels of testing and resistance to attack required may vary accordingly. The conclusion of the analysis may therefore differ between an AI system that is publicly accessible to an unlimited number of users and one that is internal to a company with access limited to a small number of employees, although this is no substitute for minimising the likelihood of personal data being extracted from the model.

AI system testing and resistance to attack

This section aims to guide the assessment of the likelihood of re-identification by attacks, through the use of the system by any person.

The appropriateness of the scope, frequency, quantity and quality of the data extraction tests that the controller has carried out on the system must be assessed in the light of the state of the art, as well as the means reasonably likely to be used to re-identify individuals from use of the system, including in the near future. These tests may include in particular:

- Tests aimed at obtaining partial or direct access to the AI model through use of the system;

- Training data regurgitation tests, in the case of generative AI models;

- Membership inference attacks;

- Exfiltration attacks;

- Model inversion attacks;

- Reconstruction attacks.

It should be noted that resistance to a technique implementing one of these types of attack does not prejudge resistance to another technique.

Recommendations concerning the management of tests and attacks

Certain types of attack presented above require demanding technical knowledge and resources. It is therefore recommended to carry out attacks on the AI system in order of increasing difficulty of implementation. When an attack enables personal data to be extracted from the AI system, the type of personal data affected must be determined. The data controller may then choose to:

- Stop the analysis at this stage, considering that all the data types present in the model can be extracted with the likelihood associated with the easiest attack that succeeded on any one type;

- Continue the analysis by carrying out all the tests and attacks that constitute means reasonably likely to be used, in order to establish the likelihood of extraction associated with each type of training data. More in-depth re-identification tests may, for example, make it possible to distinguish the likelihood of extraction of pre-training data from that of fine-tuning data, which may present different risks for the individuals concerned.

Cases where AI models or systems incorporating them are wrongly excluded from the scope of the GDPR

Individuals may be re-identified from a model even though the provider had considered the likelihood of this to be insignificant, for example because the provider was unaware of a vulnerability, or because data extraction techniques have improved.

For this reason, the CNIL recommends that providers, as part of their security obligations, regularly check the validity of their analysis (taking into account developments in the state of the art) and anticipate any data breaches that may occur.

It is good practice to provide users with a means of reporting incidents (e.g. a function in the system interface, a contact form or model version management). This practice complements the obligations of the European AI Act (AIA), which provides for post-market monitoring by providers of high-risk AI systems (Article 72 of the AIA) and for the reporting of such risks by the deployers of those systems (Article 26 of the AIA).

Where personal data is extracted, any exploitation of the vulnerability should be prevented and, where possible, the provider should examine whether the re-identification vulnerability has been exploited, and by whom. The provider will also have to consider whether a personal data breach has occurred (within the meaning of Article 33 of the GDPR) and draw the consequences: in particular, document the breach (including the nature of the data, how it was disseminated and the resulting consequences), notify the CNIL within 72 hours if the breach is likely to give rise to risks for individuals, and also inform the individuals concerned if those risks are high (Article 34 of the GDPR).
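The timing and escalation rules summarised above can be sketched as a small helper. This is a simplification under stated assumptions: the three-level risk scale and the function names are hypothetical, the 72-hour period is counted from the moment the provider became aware of the breach (Article 33(1) GDPR), and exceptions such as those in Article 34(3) GDPR are not modelled.

```python
from datetime import datetime, timedelta
from typing import List

# Article 33(1) GDPR: notify, where feasible, within 72 hours of becoming aware.
NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(became_aware_at: datetime) -> datetime:
    """Latest time to notify the supervisory authority (72 hours after becoming aware)."""
    return became_aware_at + NOTIFICATION_WINDOW


def breach_actions(risk_level: str) -> List[str]:
    """Actions triggered by a personal data breach, given the assessed risk to individuals.

    `risk_level` is one of "unlikely", "risk" or "high" (a simplified, hypothetical scale).
    """
    if risk_level not in {"unlikely", "risk", "high"}:
        raise ValueError(f"unknown risk level: {risk_level!r}")
    actions = ["document the breach"]  # every breach is documented (Article 33(5))
    if risk_level in {"risk", "high"}:
        actions.append("notify the CNIL")  # Article 33(1)
    if risk_level == "high":
        actions.append("inform the individuals concerned")  # Article 34(1)
    return actions


if __name__ == "__main__":
    print(notification_deadline(datetime(2026, 1, 5, 9, 0)))  # prints 2026-01-08 09:00:00
    print(breach_actions("high"))
```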

Please note: The fact that an extraction of personal data is classified as a data breach does not necessarily mean that the provider is at fault. For example, the provider may not be held liable if it concluded that its model was anonymous on the basis of a sufficient and properly documented analysis, if an extraction subsequently became possible because of an unforeseeable change in the state of the art, and if the provider responds correctly to the breach by properly fulfilling its obligations in this area.

Depending on the seriousness of the incident and any resulting breaches of the GDPR, the CNIL may require the model in question to be retrained or deleted.